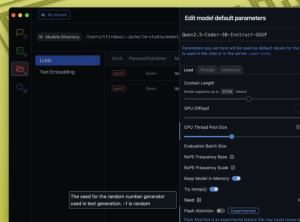

Running Large Language Models (LLMs) Locally with LM Studio

Running LLMs locally with tools like LM Studio or Ollama has several advantages, including privacy, lower costs, and offline availability. However, these models are resource-intensive and need careful tuning to run efficiently.